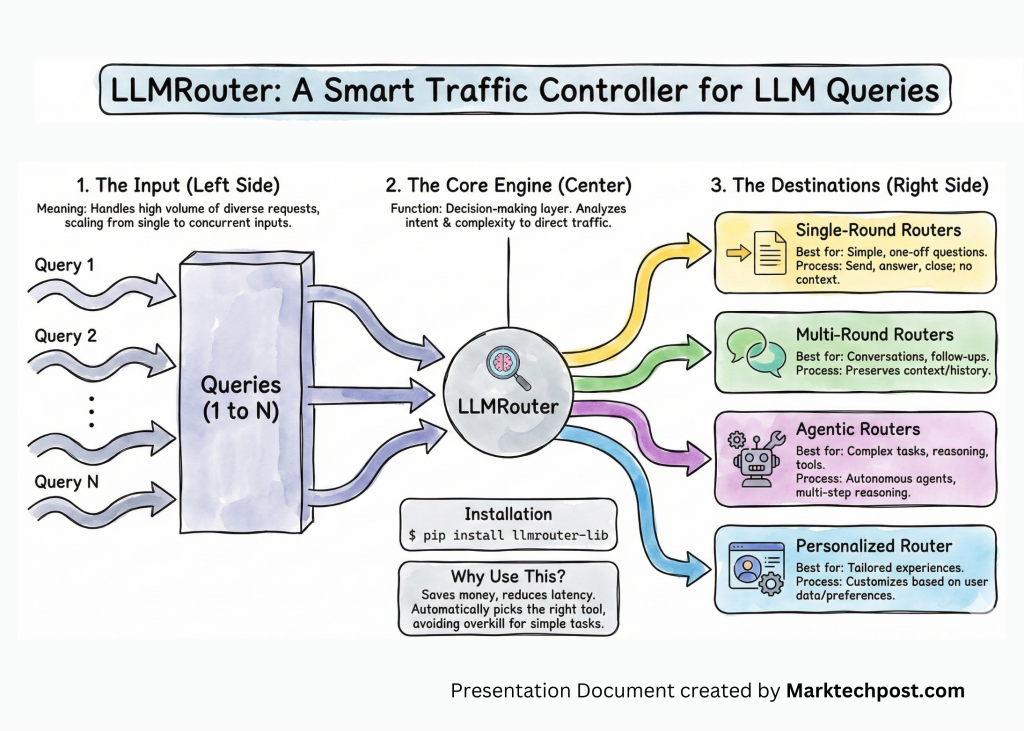

LLMRouter from UIUC's U Lab tackles a need that will only grow as model options multiply: routing each query to the right LLM based on complexity, quality needs, and cost. It's open source too, which makes it practical for teams trying to cut inference spend without sacrificing output quality.
