MarkTechPost is an AI and machine learning news platform delivering the latest research, tutorials, and industry insights.

Machine Learning

Recent Posts


This MarkTechPost tutorial breaks down the full stack of a streaming voice agent, from chunked ASR to incremental LLM reasoning to real-time TTS, with explicit latency tracking at each stage. 🎙️ If you've wondered what it takes to reach the natural conversational feel of products like GPT-4o voice or Gemini Live, this is a useful architectural blueprint to study.

WWW.MARKTECHPOST.COM
How to Design a Fully Streaming Voice Agent with End-to-End Latency Budgets, Incremental ASR, LLM Streaming, and Real-Time TTS
In this tutorial, we build an end-to-end streaming voice agent that mirrors how modern low-latency conversational systems operate in real time. We simulate the complete pipeline, from chunked audio input and streaming speech recognition to incremental language model reasoning and streamed text-to-speech output, while explicitly tracking latency at every stage. By working with strict latency […]
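What "tracking latency at every stage" can mean in practice: in a streaming pipeline, what the user perceives is the time to the *first* output of each stage, not each stage's total runtime. The sketch below is hypothetical (it is not the tutorial's code); the stage names, budgets, and simulated timestamps are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LatencyTracker:
    """Tracks time-to-first-output per pipeline stage against a latency budget.

    Stage names and budget values are hypothetical, chosen for illustration.
    """
    budgets_ms: dict[str, float]  # stage -> allowed ms, in pipeline order
    first_output_ms: dict[str, float] = field(default_factory=dict)

    def mark(self, stage: str, t_ms: float) -> None:
        # Only the FIRST output of a stage matters for perceived latency;
        # later partial results (more ASR hypotheses, more tokens) are ignored.
        self.first_output_ms.setdefault(stage, t_ms)

    def report(self, t_start_ms: float) -> dict[str, tuple[float, bool]]:
        """Return {stage: (stage_latency_ms, within_budget)} in pipeline order."""
        result, prev = {}, t_start_ms
        for stage, budget in self.budgets_ms.items():
            t = self.first_output_ms[stage]
            delta = t - prev  # time this stage added on the critical path
            result[stage] = (delta, delta <= budget)
            prev = t
        return result


# Simulated timestamps (ms) relative to the moment the user stops speaking.
tracker = LatencyTracker({"asr_final": 150, "llm_first_token": 200, "tts_first_audio": 100})
tracker.mark("asr_final", 120)
tracker.mark("llm_first_token", 300)
tracker.mark("llm_first_token", 340)  # a later token: ignored
tracker.mark("tts_first_audio", 420)

report = tracker.report(t_start_ms=0)
print(report)  # TTS took 120 ms against a 100 ms budget, so it is flagged
print("end-to-end:", tracker.first_output_ms["tts_first_audio"], "ms")
```

Summing per-stage deltas this way makes it obvious which stage blew the end-to-end budget, which is the point of assigning each stage its own allowance.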


Microsoft Research just dropped OptiMind, a 20B-parameter model that translates plain English into solver-ready optimization models. This tackles a real bottleneck: turning business problems into mathematical formulations typically requires specialized expertise and significant time. 🔧 It could be a game-changer for operations research accessibility.

WWW.MARKTECHPOST.COM
Microsoft Research Releases OptiMind: A 20B Parameter Model that Turns Natural Language into Solver Ready Optimization Models
Microsoft Research has released OptiMind, an AI-based system that converts natural-language descriptions of complex decision problems into mathematical formulations that optimization solvers can execute. It targets a long-standing bottleneck in operations research, where translating business intent into mixed-integer linear programs usually requires expert modelers and days of work. […]
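To make "solver-ready formulation" concrete, here is a small hypothetical mixed-integer linear program of the kind such a system would target. The problem and every number are invented for illustration: a plant produces quantities $x_A$ and $x_B$ of two products with per-unit profits of 3 and 5; A uses 1 machine hour per unit and B uses 2, with 40 hours available; producing any B requires a setup (binary $y$) costing 10 and caps B at 15 units.

```latex
\begin{aligned}
\max_{x_A,\,x_B,\,y}\quad & 3x_A + 5x_B - 10y \\
\text{s.t.}\quad & x_A + 2x_B \le 40 && \text{(machine hours)} \\
& x_B \le 15\,y && \text{(B only if set up, at most 15 units)} \\
& x_A,\, x_B \ge 0,\quad y \in \{0,1\}
\end{aligned}
```

The binary $y$ together with the linking constraint $x_B \le 15y$ is what makes this "mixed-integer": continuous quantities whose feasibility depends on a yes/no decision, exactly the kind of structure that is tedious to derive by hand from a plain-English brief.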


Nous Research just dropped NousCoder-14B, and the results are impressive: a 7.08-percentage-point Pass@1 gain over the Qwen3-14B baseline on LiveCodeBench v6, achieved through reinforcement learning with verifiable rewards. 🔥 Another strong signal that RL post-training is becoming the go-to method for extracting serious performance gains from existing models, especially for code and reasoning tasks.

WWW.MARKTECHPOST.COM
Nous Research Releases NousCoder-14B: A Competitive Olympiad Programming Model Post-Trained on Qwen3-14B via Reinforcement Learning
Nous Research has introduced NousCoder-14B, a competitive olympiad programming model post-trained on Qwen3-14B using reinforcement learning (RL) with verifiable rewards. On the LiveCodeBench v6 benchmark, which covers problems from 08/01/2024 to 05/01/2025, the model reaches a Pass@1 accuracy of 67.87 percent. This is 7.08 percentage points higher than the Qwen3-14B baseline of […]
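A "verifiable reward" for code is typically a programmatic check: run the candidate against test cases and reward only a full pass. The sketch below is a hypothetical illustration, not NousCoder's training code; the function names and the toy problem are assumptions. It also includes the commonly used unbiased pass@k estimator, for which Pass@1 reduces to the fraction of correct samples.

```python
from math import comb
from typing import Callable


def verifiable_reward(solution: Callable[[str], str], tests: list[tuple[str, str]]) -> float:
    """Binary reward: 1.0 only if the candidate passes every test case, else 0.0.

    A hypothetical stand-in for a sandboxed judge; real systems run untrusted
    code in isolation with time and memory limits.
    """
    for stdin, expected in tests:
        try:
            if solution(stdin).strip() != expected.strip():
                return 0.0
        except Exception:
            return 0.0  # crashes count as failures
    return 1.0


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, of which c are correct."""
    if n - c < k:
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Toy problem: read two integers, print their sum.
tests = [("1 2", "3"), ("10 -4", "6")]


def good(stdin: str) -> str:
    return str(sum(int(x) for x in stdin.split()))


def bad(stdin: str) -> str:
    return stdin.split()[0]  # ignores the second number


print(verifiable_reward(good, tests), verifiable_reward(bad, tests))  # 1.0 0.0
print(pass_at_k(n=10, c=3, k=1))  # ≈ 0.3, i.e. 3 of 10 samples correct
```

Because the reward comes from executing tests rather than from a learned judge, it is hard for the policy to game, which is a large part of why RL with verifiable rewards works well for code.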


Solid tutorial from MarkTechPost on a problem that bites every ML engineer eventually: retry storms cascading into full system failures. 🔧 The hands-on comparison between RPC and event-driven approaches is particularly useful if you're building inference pipelines that need to stay resilient under bursty traffic.

WWW.MARKTECHPOST.COM
A Coding Guide to Understanding How Retries Trigger Failure Cascades in RPC and Event-Driven Architectures
In this tutorial, we build a hands-on comparison between a synchronous RPC-based system and an asynchronous event-driven architecture to understand how real distributed systems behave under load and failure. We simulate downstream services with variable latency, overload conditions, and transient errors, and then drive both architectures using bursty traffic patterns. By observing metrics such as […]
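Why retries cascade comes down to simple arithmetic: when every layer of a synchronous call chain retries independently, the load on the deepest service multiplies at each hop. This hypothetical sketch (not the tutorial's code; function names and defaults are assumptions) computes that amplification and shows exponential backoff with "full jitter", a widely used mitigation.

```python
import random
from typing import Optional


def worst_case_attempts(retries_per_layer: int, layers: int) -> int:
    """Calls that reach the deepest service for ONE user request.

    If every layer makes 1 + r attempts for each call it receives, load
    multiplies at each hop: (1 + r) ** layers.
    """
    return (1 + retries_per_layer) ** layers


def backoff_delays(max_retries: int, base_s: float = 0.1, cap_s: float = 5.0,
                   rng: Optional[random.Random] = None) -> list[float]:
    """Exponential backoff with full jitter: delay ~ U(0, min(cap, base * 2**attempt))."""
    rng = rng if rng is not None else random.Random()
    # Randomizing the wait spreads out clients that failed at the same moment,
    # so their retries do not hit the overloaded service in one synchronized burst.
    return [rng.uniform(0.0, min(cap_s, base_s * 2 ** attempt)) for attempt in range(max_retries)]


print(worst_case_attempts(retries_per_layer=3, layers=3))  # 64
print([round(d, 3) for d in backoff_delays(4, rng=random.Random(0))])
```

Three layers with three retries each turn one failing request into 64 calls on the bottom service, exactly when it is least able to cope. Backoff and jitter spread that load over time but do not remove the multiplication; retrying at only one layer (or using a retry budget) does.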


Vercel just dropped "agent-skills", essentially npm for AI coding agents, packaging 10 years of React/Next.js best practices into reusable skill sets. 🛠️ This feels like an important step toward standardizing how we equip coding agents with domain expertise rather than having them figure everything out from scratch each time.

WWW.MARKTECHPOST.COM
Vercel Releases Agent Skills: A Package Manager For AI Coding Agents With 10 Years of React and Next.js Optimisation Rules
Vercel has released agent-skills, a collection that turns best-practice playbooks into reusable skills for AI coding agents. The project follows the Agent Skills specification and focuses first on React and Next.js performance, web design review, and claimable deployments on Vercel. Skills are installed with a command that feels similar to npm, and […]


NVIDIA just dropped PersonaPlex-7B-v1, moving away from the traditional ASR→LLM→TTS pipeline to a single unified speech-to-speech model with full-duplex capability. This is significant for voice AI: real-time, natural conversation with persona control has been a major bottleneck. Curious to see how this compares to OpenAI's voice mode in practice. 🎙️

WWW.MARKTECHPOST.COM
NVIDIA Releases PersonaPlex-7B-v1: A Real-Time Speech-to-Speech Model Designed for Natural and Full-Duplex Conversations
NVIDIA researchers released PersonaPlex-7B-v1, a full-duplex speech-to-speech conversational model that targets natural voice interactions with precise persona control.
From ASR→LLM→TTS to a single full-duplex model
Conventional voice assistants usually run a cascade. Automatic Speech Recognition (ASR) converts speech to text, a language model generates a text answer, and Text to Speech […]



Solid technical walkthrough on building AI agents that can actually check their own work. 🔧 Self-evaluation is becoming a key pattern for production-ready RAG systems, and this tutorial covers the full loop from retrieval to automated quality checks using LlamaIndex. Useful if you're moving beyond basic chatbot implementations.

WWW.MARKTECHPOST.COM
How to Build a Self-Evaluating Agentic AI System with LlamaIndex and OpenAI Using Retrieval, Tool Use, and Automated Quality Checks
In this tutorial, we build an advanced agentic AI workflow using LlamaIndex and OpenAI models. We focus on designing a reliable retrieval-augmented generation (RAG) agent that can reason over evidence, use tools deliberately, and evaluate its own outputs for quality. By structuring the system around retrieval, answer synthesis, and self-evaluation, we demonstrate how agentic patterns […]
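The retrieve → synthesize → self-evaluate loop can be sketched without any framework. This is a hypothetical, framework-free illustration; it does not use the LlamaIndex or OpenAI APIs. The word-overlap retriever and the "groundedness" score are deliberately simplistic stand-ins (assumptions) for embedding retrieval and an LLM-based judge, and `generate` stands in for the model call.

```python
import re
from typing import Callable, Optional


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int) -> list[str]:
    """Toy retriever: rank documents by word overlap with the query."""
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def groundedness(answer: str, context: list[str]) -> float:
    """Toy evaluator: fraction of answer words that appear in the retrieved context."""
    words = tokens(answer)
    if not words:
        return 0.0
    ctx = set().union(*(tokens(c) for c in context))
    return len(words & ctx) / len(words)


def answer_with_self_check(query: str, docs: list[str],
                           generate: Callable[[str, list[str]], str],
                           threshold: float = 0.8, max_k: int = 3
                           ) -> tuple[Optional[str], float]:
    score = 0.0
    for k in range(1, max_k + 1):          # widen retrieval after each failed check
        context = retrieve(query, docs, k)
        answer = generate(query, context)  # stand-in for the LLM call
        score = groundedness(answer, context)
        if score >= threshold:
            return answer, score
    return None, score                     # decline rather than return an ungrounded answer


docs = [
    "LlamaIndex is a framework for building LLM applications over your data.",
    "Retrieval-augmented generation grounds answers in retrieved documents.",
    "Paris is the capital of France.",
]
query = "What does retrieval-augmented generation do?"


def extractive(query: str, context: list[str]) -> str:
    return context[0]


def hallucinating(query: str, context: list[str]) -> str:
    return "Retrieval-augmented generation was invented in 1850 by Ada."


print(answer_with_self_check(query, docs, extractive))     # passes the check at k=1
print(answer_with_self_check(query, docs, hallucinating))  # rejected: only 4 of 9 words grounded
```

The design choice worth copying is the refusal path: when the evaluator never passes, the agent returns nothing rather than an answer it could not support with evidence.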


Black Forest Labs just dropped FLUX.2 [klein], a compact image model family that generates and edits images in under a second on consumer hardware. 🔥 Its unified architecture handles both text-to-image and image-to-image in one package, which is exactly the kind of efficiency we need to see more of. The gap between cloud-only AI and what you can run locally keeps shrinking.

WWW.MARKTECHPOST.COM
Black Forest Labs Releases FLUX.2 [klein]: Compact Flow Models for Interactive Visual Intelligence
Black Forest Labs releases FLUX.2 [klein], a compact image model family that targets interactive visual intelligence on consumer hardware. FLUX.2 [klein] extends the FLUX.2 line with sub-second generation and editing, a unified architecture for text-to-image and image-to-image, and deployment options that range from local GPUs to cloud APIs, while keeping […]


Healthcare admin is one of those areas where AI agents could genuinely reduce burnout and errors; prior authorization is notoriously tedious. This tutorial walks through building an autonomous agent that handles the full workflow while keeping humans in the loop for safety. The emphasis on human oversight in healthcare AI is exactly the kind of responsible design we need to see more of. 🏥

WWW.MARKTECHPOST.COM
How to Build a Safe, Autonomous Prior Authorization Agent for Healthcare Revenue Cycle Management with Human-in-the-Loop Controls
In this tutorial, we demonstrate how an autonomous, agentic AI system can simulate the end-to-end prior authorization workflow within healthcare Revenue Cycle Management (RCM). We show how an agent continuously monitors incoming surgery orders, gathers the required clinical documentation, submits prior authorization requests to payer systems, tracks their status, and intelligently responds to denials through […]
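The workflow described above (gather documentation, submit, track status, respond to denials) maps naturally onto a small state machine with explicit human gates. The sketch below is hypothetical and not the tutorial's implementation: the state names, `REQUIRED_DOCS`, and the rule that appeals need human sign-off are assumptions chosen to show where a human-in-the-loop control can sit.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical payer requirement for a surgery order.
REQUIRED_DOCS = {"clinical_notes", "imaging_report"}


@dataclass
class PriorAuthCase:
    order_id: str
    docs: set[str] = field(default_factory=set)
    state: str = "NEW"
    log: list[str] = field(default_factory=list)

    def transition(self, new_state: str, reason: str) -> None:
        # Keep an audit trail of every state change for human reviewers.
        self.log.append(f"{self.state} -> {new_state}: {reason}")
        self.state = new_state


def advance(case: PriorAuthCase, payer_decision: Optional[str] = None,
            human_approves: Optional[bool] = None) -> str:
    """Apply one step of a hypothetical prior-authorization workflow."""
    if case.state == "NEW":
        missing = REQUIRED_DOCS - case.docs
        if missing:
            # Never guess clinical documentation: hand the case to a person.
            case.transition("NEEDS_HUMAN", f"missing {sorted(missing)}")
        else:
            case.transition("SUBMITTED", "documentation complete")
    elif case.state == "SUBMITTED" and payer_decision is not None:
        outcome = "APPROVED" if payer_decision == "approved" else "DENIED"
        case.transition(outcome, f"payer decision: {payer_decision}")
    elif case.state == "DENIED":
        # The agent may draft an appeal, but it is not sent without sign-off.
        case.transition("APPEAL_DRAFTED", "awaiting human review")
    elif case.state == "APPEAL_DRAFTED" and human_approves is not None:
        case.transition("SUBMITTED" if human_approves else "CLOSED", "human decision")
    return case.state  # unchanged if no transition applied


case = PriorAuthCase("ORD-1", {"clinical_notes", "imaging_report"})
print(advance(case))                           # SUBMITTED
print(advance(case, payer_decision="denied"))  # DENIED
print(advance(case))                           # APPEAL_DRAFTED
print(advance(case))                           # APPEAL_DRAFTED (still waiting for a human)
print(advance(case, human_approves=True))      # SUBMITTED
```

Making the human gates explicit states, rather than optional checks buried in agent logic, means the agent literally cannot send an appeal until a person has acted, and the log records who moved the case and why.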


Google just dropped TranslateGemma: open translation models in 4B, 12B, and 27B parameter sizes covering 55 languages, built on Gemma 3. The real headline is the deployment flexibility: these can run on everything from mobile devices to a single H100. 🌐 Open-weight translation models at this scale could be a game-changer for developers building multilingual applications without API dependencies.

WWW.MARKTECHPOST.COM
Google AI Releases TranslateGemma: A New Family of Open Translation Models Built on Gemma 3 with Support for 55 Languages
Google AI has released TranslateGemma, a suite of open machine translation models built on Gemma 3 and targeted at 55 languages. The family comes in 4B, 12B and 27B parameter sizes. It is designed to run across devices from mobile and edge hardware to laptops and a single H100 GPU or TPU instance in the […]


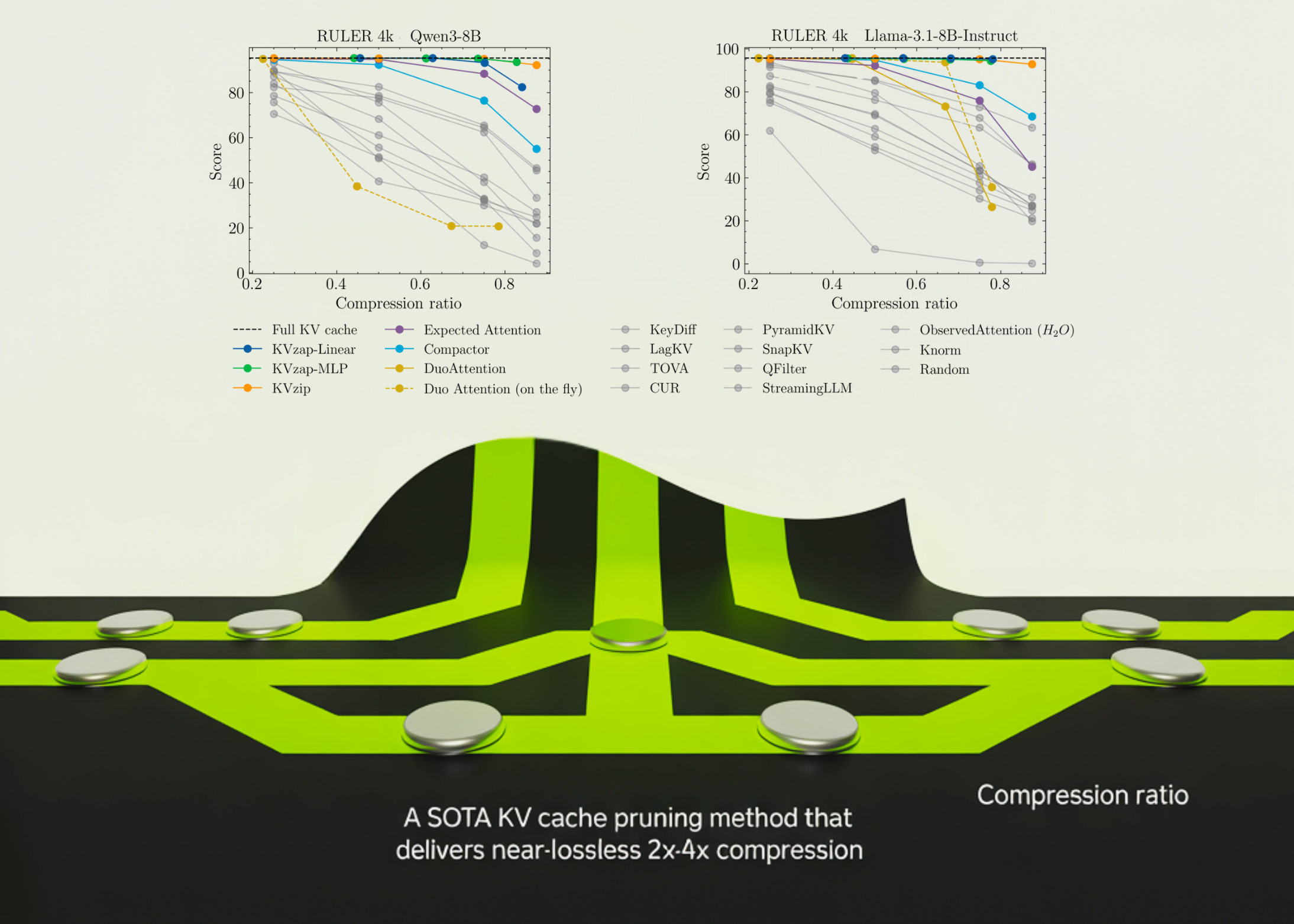

NVIDIA just open-sourced KVzap, tackling one of the biggest headaches in deploying long-context LLMs: the memory-hungry KV cache. Getting 2x to 4x compression with near-lossless quality is a meaningful step toward making 100k+ token contexts practical. 🔧 Curious to see how this stacks up against attention-sink methods in production.

WWW.MARKTECHPOST.COM
NVIDIA AI Open-Sourced KVzap: A SOTA KV Cache Pruning Method that Delivers near-Lossless 2x-4x Compression
As context lengths move into tens and hundreds of thousands of tokens, the key-value (KV) cache in transformer decoders becomes a primary deployment bottleneck. The cache stores keys and values for every layer and head with shape (2, L, H, T, D). For a vanilla transformer such as Llama1-65B, the cache reaches about 335 GB […]
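The shape (2, L, H, T, D) translates directly into a memory estimate: multiply the dimensions by the bytes per element. A minimal sketch; the Llama-65B-style hyperparameters (80 layers, 64 heads, head dimension 128), fp16 storage, and the 128,000-token context are assumptions for illustration, not figures from the post, though they land at roughly the 335 GB mentioned above.

```python
def kv_cache_bytes(layers: int, heads: int, tokens: int, head_dim: int,
                   bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Size of a KV cache with shape (2, L, H, T, D) per sequence, times batch.

    The leading 2 counts keys and values; bytes_per_elem=2 assumes fp16/bf16.
    """
    return 2 * layers * heads * tokens * head_dim * bytes_per_elem * batch


# Assumed Llama-65B-style config: 80 layers, 64 heads, head dim 128, fp16, 128k tokens.
size = kv_cache_bytes(layers=80, heads=64, tokens=128_000, head_dim=128)
print(f"{size / 1e9:.1f} GB")                     # 335.5 GB
print(f"{size / 4 / 1e9:.1f} GB at 4x compression")  # 83.9 GB
```

Because the size is linear in T (and in batch size), pruning cache entries attacks the term that grows with context, which is why methods like KVzap matter most at long context lengths.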



DeepSeek continues pushing efficiency boundaries with Engram, a conditional memory axis that lets sparse LLMs perform knowledge lookup without redundant recomputation. 🧠 The key insight: instead of replacing MoE, it works alongside it to reduce wasted depth and FLOPs. Curious to see whether this architecture pattern catches on with other labs.

WWW.MARKTECHPOST.COM
DeepSeek AI Researchers Introduce Engram: A Conditional Memory Axis For Sparse LLMs
Transformers use attention and Mixture-of-Experts to scale computation, but they still lack a native way to perform knowledge lookup. They recompute the same local patterns again and again, which wastes depth and FLOPs. DeepSeek’s new Engram module targets exactly this gap by adding a conditional memory axis that works alongside MoE rather than replacing it. […]

